There’s a widespread — and dangerously misleading — modern belief that quantum computing will magically solve all of today’s computational limitations. As if throwing more “firepower” at the problem would automatically overcome the logical, mathematical, and structural constraints of software systems. That’s, at best, a half-truth.

Computing isn’t just about “processing more.” Processing trillions of data points per second doesn’t help in problems where state, order, and consistency matter more than raw speed. And this becomes especially clear when we look at the nature of qubits.

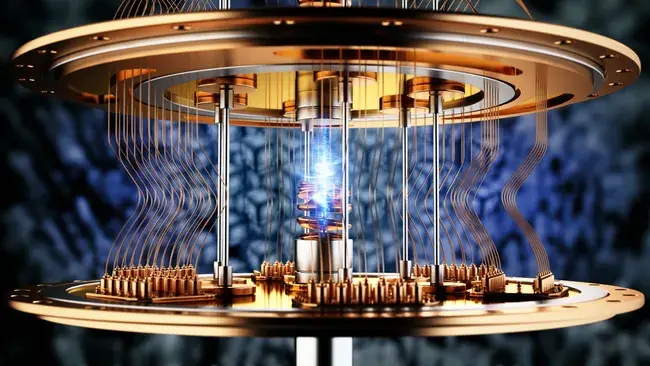

Qubits are not fast bits

Unlike classical bits (0 or 1), qubits can be in superposition: a weighted combination of 0 and 1 that persists until it is measured. We can steer those weights with quantum gates, but we can’t read them out directly. A measurement returns a single 0 or 1, so in practice we only deal with probabilities.

Think of it like this: imagine a TV screen made of millions of tiny LEDs flickering between on and off. Every time you look, the image isn’t fixed — it’s just the current result of all those LEDs flashing in random patterns. The repetition of this process at high speed creates an illusion of stability — similar to how a spinning helicopter blade looks motionless at full speed.

That’s what a qubit is. We can’t read its exact state; we just observe momentary collapses of potential.
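To make the "only probabilities" point concrete, here is a minimal sketch of measuring a single qubit, written in plain Python rather than any quantum SDK. The function name `measure` and the example amplitudes are illustrative assumptions. The only physics it uses is the Born rule: the probability of each outcome is the squared magnitude of its amplitude.

```python
import math
import random

def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate one measurement of the state alpha|0> + beta|1>.

    Born rule: P(0) = |alpha|^2 and P(1) = |beta|^2.
    """
    p0 = abs(alpha) ** 2
    p1 = abs(beta) ** 2
    if not math.isclose(p0 + p1, 1.0, rel_tol=1e-9):
        raise ValueError("state must be normalized")
    return 0 if rng.random() < p0 else 1

# Equal superposition (hypothetical example state): 50/50 odds on every look.
amp = 1 / math.sqrt(2)
rng = random.Random(42)  # fixed seed so the sketch is reproducible
shots = [measure(amp, amp, rng) for _ in range(10_000)]
ones = sum(shots)
print(f"measured 1 in {ones / len(shots):.1%} of shots")
```

Each individual shot is unpredictable, but the frequencies over many shots are stable. That is the sense in which a quantum result is statistical rather than random noise.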

Where does quantum computing actually shine?

In problems whose structure a quantum algorithm can exploit, such as:

Prime factorization (which underpins RSA, one of the most widely deployed public-key systems);

Optimal pathfinding in complex graphs;

Massive correlation in generative AI models;

And of course, brute-force cryptographic attacks, where Grover’s algorithm offers a quadratic speedup.

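Why? Because superposition and interference let a quantum algorithm work on many candidate answers at once and amplify the right ones. That is not the same as checking billions of possibilities in parallel and reading them all back: a measurement returns one result, so the speedup depends on the algorithm. It is roughly exponential over the best known classical methods for factoring with Shor’s algorithm, but only quadratic for unstructured search with Grover’s.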
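One way to see what "evaluating many possibilities" buys in practice is Grover’s search. The sketch below is a classical simulation of the amplitudes, not code for real quantum hardware; the function name and the choice of 1,024 items are assumptions for illustration. It shows that finding one marked item takes about (π/4)·√N rounds rather than a single magic step.

```python
import math

def grover_success_probability(n_items: int, marked: int):
    """Simulate Grover's search over n_items amplitudes.

    Returns (iterations used, probability of measuring the marked item).
    """
    amps = [1 / math.sqrt(n_items)] * n_items   # uniform superposition
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        amps[marked] = -amps[marked]            # oracle: flip the marked sign
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]     # diffusion: invert about the mean
    return iterations, amps[marked] ** 2

iterations, prob = grover_success_probability(n_items=1024, marked=123)
print(iterations, round(prob, 3))
```

A classical scan of 1,024 items needs about 512 checks on average, while this needs a few dozen rounds. That is a real gain, but a quadratic one, and the answer still arrives as a high probability rather than a certainty.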

The Real Problem: Security

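Let’s look at a simple example. Encrypting the string "Hello World" using AES-256-GCM, signed with an elliptic curve key (e.g., secp256k1, used in Bitcoin), gives you an encrypted buffer of 39 bytes with the usual parameters (about 52 characters once Base64-encoded, signature not included), which contains:

A nonce (12 bytes, unique per message and never reused with the same key);

The encrypted payload (11 bytes, the same length as the plaintext);

A GCM tag for verification (16 bytes).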
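The size of that buffer can be checked with simple arithmetic. This sketch performs no real encryption: it assumes the common 12-byte nonce and 16-byte tag, and a nonce || ciphertext || tag layout (a widespread convention, not something the format mandates). The helper name `gcm_buffer_length` is hypothetical.

```python
import base64
import os

NONCE_LEN = 12   # 96-bit nonce, the size recommended for GCM
TAG_LEN = 16     # 128-bit authentication tag (the GCM maximum)

def gcm_buffer_length(plaintext: bytes) -> int:
    """Length of nonce || ciphertext || tag.

    GCM ciphertext is exactly as long as the plaintext.
    """
    return NONCE_LEN + len(plaintext) + TAG_LEN

raw_len = gcm_buffer_length(b"Hello World")
# Placeholder bytes of the right length, used only to measure the encoded size.
encoded_len = len(base64.b64encode(os.urandom(raw_len)))
print(raw_len, encoded_len)  # prints: 39 52
```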

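The public key used to verify the signature is derived from the private key by elliptic curve point multiplication. The public key is visible, but the private key that generated it stays hidden, because reversing that multiplication (the elliptic curve discrete logarithm problem) is infeasible on classical hardware.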

Now let’s look at the real math behind this:

A Bitcoin seed phrase (BIP39, with BIP44 derivation) has 2,048²⁴ possible combinations (24 mnemonic words, 2,048 options per word).

That’s roughly 2.96e+79 possibilities, although 8 of the 264 bits are a checksum, so only 2²⁵⁶ of those phrases are valid.

The key itself (as a 64-char hexadecimal) has 16⁶⁴ = 2²⁵⁶ combinations, or: ≈ 1.16e+77

(That’s 115,792,089,237,316,195,423,570,985,008,687,907,853,269,984,665,640,564,039,457,584,007,913,129,639,936 combinations.)

That number is so massive that, even with every computer on Earth working non-stop, brute-forcing it would take vastly longer than the age of the universe.
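These figures are easy to verify, since Python handles integers of any size. The sketch below computes 2,048²⁴ and 16⁶⁴ exactly, then estimates how long an exhaustive search would take. The attack rate of 10¹⁸ guesses per second is a deliberately generous assumption, not a measured figure.

```python
MNEMONIC_COMBINATIONS = 2048 ** 24   # 24 words, 2,048 choices each
HEX_KEY_COMBINATIONS = 16 ** 64      # 64 hex characters = 256 bits

# Hypothetical attacker: 10**18 guesses per second (assumed, chosen generously).
GUESSES_PER_SECOND = 10 ** 18
SECONDS_PER_YEAR = 365 * 24 * 60 * 60

years_to_exhaust = HEX_KEY_COMBINATIONS // (GUESSES_PER_SECOND * SECONDS_PER_YEAR)

print(f"mnemonic space: {MNEMONIC_COMBINATIONS:.3e}")
print(f"hex key space:  {HEX_KEY_COMBINATIONS:.3e}")
print(f"years to try every key: {years_to_exhaust:.1e}")
```

Even at that rate the search runs to more than 10⁵¹ years, which is why classical brute force is not the realistic threat to these keys.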

And then comes quantum.

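What was once computationally impossible becomes, in principle, tractable. The threat is not a machine that tries billions or trillions of keys per second: Shor’s algorithm attacks the math behind elliptic curves and RSA directly, while Grover’s algorithm only gives a quadratic speedup against symmetric ciphers, leaving AES-256 with roughly 128 bits of effective security. Neither guarantees success on a single run. We’re talking probabilities and collapses, not deterministic outcomes, even though a candidate answer can be checked classically. And not knowing when such a machine will arrive makes cryptographic trust itself… unstable.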
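A probabilistic attack is still an attack, because a candidate key can be verified classically (derive the public key and compare) and the algorithm rerun until one passes. The sketch below computes how many independent runs are needed to reach a target overall confidence. The per-run success rate used here is a made-up illustrative number, and `runs_needed` is a hypothetical helper.

```python
import math

def runs_needed(p_success: float, target_confidence: float) -> int:
    """Smallest k with 1 - (1 - p_success)**k >= target_confidence."""
    if not (0 < p_success < 1 and 0 < target_confidence < 1):
        raise ValueError("both arguments must be strictly between 0 and 1")
    return math.ceil(math.log(1 - target_confidence) / math.log(1 - p_success))

# Hypothetical numbers: each run succeeds half the time; we want 99.9% overall.
k = runs_needed(p_success=0.5, target_confidence=0.999)
print(k)  # prints: 10
```

The failure probability shrinks exponentially with the number of runs, so "not guaranteed on one attempt" quickly becomes "near certain after a handful".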

Quantum computing isn’t “faster” computing — it’s a different paradigm.

Yes, it may eventually break some cryptographic models. But it won’t fix classical computing design.

Intermittent States ≠ Deterministic Computation

Because measurement is probabilistic, qubits on their own are a poor substrate for deterministic code. Their state is only revealed when observed, and it collapses into one among many possibilities.

Put simply: you can’t keep a loop counter in superposed qubits and trust the loop to run exactly 10 times.

You can’t rely on an if (x === y) over superposed values to behave consistently. That is why today’s quantum programs leave control flow to a classical host.

And that predictability is what makes computing work:

It’s what lets an OS function.

It’s what makes your database reliable.

It’s what powers your browser, your video encoder, your compiler.

All of modern computing relies on stable bits — values that follow the instructions in your code precisely.

The Physical Problem

People also forget: raw speed doesn’t matter if the infrastructure isn’t there.

A quantum processor might, in theory, outpace any classical machine on the right problem, but:

It has no traditional RAM;

No standard bus for reading/writing;

No integration with SSDs, NVMe, PCIe, or any real-world I/O.

It’s like having a super-genius brain locked in a cave, unable to speak to the outside world.

Modern computing is an orchestra — CPU, RAM, cache, buses, storage, controllers, networking. And quantum hardware currently plays solo, out of tune with the rest.

Until a qubit can behave like a reliable byte (8 stable bits, predictable and controllable), it will remain a statistical tool, not a practical general-purpose computing unit.

And even if we get there?

What we’d have is… just a classical processor, with more instability.

Traditional silicon chips today have billions of transistors and addressable bytes. The largest quantum chips today have on the order of a thousand physical qubits, and even the roughly 1 million qubits that vendor roadmaps aim for would be "dirty": unstable, prone to decoherence, noisy.

If you force those qubits to act like classical bytes, you just lose the benefits of quantum mechanics. And get a worse processor.

In Short: A Singular Purpose

In my opinion, the only viable use case in the near future for quantum processors is: breaking encryption.

And no — not to steal your Bitcoin.

But for something far more serious:

Accessing government data, financial infrastructure, military protocols, strategic intelligence.

Because that’s what really matters at scale.

And if a quantum "Enigma" already exists, or is built one day, you can be sure of one thing:

You won’t hear about it.

That kind of tech won’t show up on GitHub or a CES keynote.

It’ll be militarized, classified, and decades ahead of anything public — just like GPS, satellite comms, and the internet were.

Final Thought

Quantum computing, with all its mystique and hype, wasn’t built to replace classical computing. It was built for niches where binary logic fails — and those niches almost always involve cryptography, quantum simulation, and probabilistic processing.

For everything else?

Good old silicon, with its stable clocks, predictable bytes, and deterministic logic —

is still the real engine of the modern world.